Why do prediction markets need automated market makers?

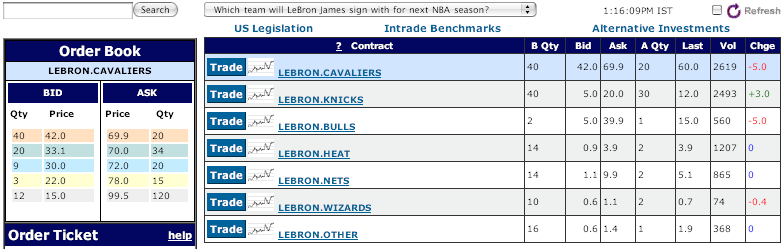

Here’s an illustration why. Abe Othman recently alerted me to intrade’s market on where basketball free agent LeBron James will sign, at the time a featured market. Take a look at this screenshot taken 2010/07/07:

The market says there’s between a 42 and 70% chance James will sign with Cleveland, between a 5 and 40% chance he’ll sign with Chicago, etc.

In other words, it doesn’t say much. The spreads between the best bid and ask prices are wide and so its predictions are not terribly useful. We can occasionally tighten these ranges by being smarter about handling multiple outcomes, but in the end low liquidity takes the prediction out of markets.

Even if someone does have information, they may not be able trade on it so may simply go away. (Actually, the problem goes beyond apathy. Placing a limit order is a risk — whoever accepts it will have a time advantage — and reveals information. If there is little chance the order will be accepted, the costs may outweigh any potential gain.)

Enter automated market makers. An automated market maker always stands ready to buy and sell every outcome at some price, adjusting along the way to bound its risk. The market maker injects liquidity, reducing the bid-ask spread and pinpointing the market’s prediction to a single number, say 61%, or at least a tight range, say 60-63%. From an information acquisition point of view, precision is important. For traders, the ability to trade any contract at any time is satisfying and self-reinforcing.

For combinatorial prediction markets like Predictalot with trillions or more outcomes, I simply can’t imagine them working at all without a market maker.

Abe Othman, Dan Reeves, Tuomas Sandholm, and I published a paper in EC 2010 on a new automated market maker algorithm. It’s a variation on Robin Hanson‘s popular market maker called the logarithmic market scoring rule (LMSR) market maker.

Almost anyone who implements LMSR, especially for play money, wonders how to set the liquidity parameter b. I’ve been asked this at least a dozen times. The answer is I don’t know. It’s more art than science. If b is too large, prices will hardly move. If b is too small, prices will bounce around wildly. Moreover, no matter what b is set to, prices will be exactly as responsive to the first dollar as the million and first dollar, counter to intuition.

Our market maker automatically adjusts its level of liquidity depending on trading volume. Prices start off very responsive and, as volume increases, liquidity grows, obviating the need to somehow guess the “right” level before trading even starts.

A side effect is that predictions take the form of ranges, like 60-63%, rather than exact point estimates. We prove that this is a necessary trade off. Any market maker that is path independent and sensitive to liquidity must give up on providing point estimates. In a way, our market maker works more like real bookies who maintain a vig or spread for every outcome.

The market maker algorithm is theoretically elegant and seems more practical than LMSR in many ways. However I’ve learned many times than nothing can replace implementing and testing a theory with real traders. Final word awaits such a trial. Stay tuned.

I’ve read the paper, and I think the AMM is great! I will soon be implementing a market maker for continuous payoff Nominal GDP contracts, so I have been looking for a good automated market maker. I have thought about modifying your AMM to deal with continuous contracts. Unfortunately I haven’t seen any papers on automated market makers for contracts with continuous payoffs.

There shouldn’t be any need to modify the AMM to handle continuous payouts. The current design provides a current price that is not limited to a binary outcome (0 or 1), so it should work fine wherever the price ends up. What makes you think you need a change to the algorithm?

I think the analysis in the paper (and the analysis in Hanson’s original LMSR paper) shows that if the market’s sponsor provides starting prices closer to the correct outcome, they lose less money (or in the current case, they might earn more money.) In Hanson’s case, the maximum loss to the sponsor comes if the final AMM price matches the correct outcome. I think he explicitly shows this for binary outcomes, but it should hold for continuous outcomes as well.

Is the “much more complex” formula for p_i(q) in 3.3.1 correct?

A different formulation (if I did my maths right), with r_j = q_j/b(q) and then factoring out the various sum_j(q_j)’s and b(q)’s, comes out as:

p_i = [ exp(r_i/a) – sum_j( r_j * exp(r_j/a) ) ] / sum_j( exp(r_j/a) ) + a log( sum_j( exp(r_j/a) ) )

That doesn’t make a lot of sense to me as a formula, though… It just sets the prices to be the LMSR price plus a given amount determined by the overall liquidity, and I’m not quite sure that sum(r*exp(r/a))/sum(exp(r/a)) is always less than a*log(sum(exp(r/a)) in order to make sure the prices always sum to at least one.

But maybe I got my maths wrong?

Using r_j seems interesting from an implementation point of view, because it leaves sum_j(r_j) adding to 1 and keeps all the exponents nicely constrained in magnitude.

I’m definitely going to take a look at your paper. Couldn’t agree more that these markets need to be market made better. I was thinking the same thing when looking at the LeBron market yesterday.

Jason / Smarkets

@jsalvati: Chris is right depending on exactly what you mean by “continuous payoff”. If you want people to predict where a single number will fall within a bounded range, like the IEM vote share markets, then LMSR can work with very little change. If you want people to be able to bet that a number will fall inside any arbitrary interval, like a continuous version of Yoopick, then LMSR will have unbounded loss. The latter problem has no known good solution that I know of.

@Chris: You’re right: the market marker’s expected loss depends on how good the prior is. However most analyses (for better or worse) focus on the worst-case loss.

@aj: Thanks. I’ll have to investigate that further.

@Jason: thanks, great.

s/going to take a look at your paper/throw it at an intern

aj, I’ve looked at this for a bit and I’m not quite sure our formulas are saying different things. In particular, look a little further down that page of the paper at “So without loss of generality we can rewrite the sum of prices as…”. I believe that formula matches up with a slight simplification of the formula you’ve posted.

I also think we’re making the same larger point – that the math terms here look awfully clunky and it’s surprising any reasonable bounds can be produced.

Regarding implementation, I’m not sure where the more nitty-gritty difficulties will arrive here. One problem I did see in the GHPM using the LMSR was math errors caused by taking large e^q values. I believe this market maker is less vulnerable to those kinds of errors.

I believe Intrade already has market makers for a few things, like the stock market closings, or things that can be easily calculated algorithmically. When you’re calculating LeBron’s odds, and there are variables no one can anticipate, Intrade stands to lose if they try to set the prices, so it’s best for them if they just let the betters go at it.

@dissident: thanks. You’re right: any market maker requires a certain amount of risk. I don’t really expect or think intrade should have a market maker for Lebron-like contracts. I’m just trying to say that if your goal is *prediction* rather than profit, a market maker may be worth the risk/cost.

aj: I went through the derivation and obtained the same result as you, albeit without the (1/a) in the exponents. I’m pretty sure the exponents cannot change during the differentiation – did you originally have r_j = q_j / sum_j (q_j) ?

With a little bit of rewriting this differs from the paper in only one term. Where the paper has:

sum_j (q_j * exp(r_j)) / sum_j (q_j) * sum_j (exp(r_j))

the derivation that myself and aj reached has:

a sum_j (r_j * exp(r_j) / sum_j (exp(r_j))

In your simplification you set sum_j (q_j) = 1/a and B(q) = 1 which makes the above terms equal. Since this simplification is used throughout the rest of the paper I think the mistake, if it is one, is confined to 3.3.1 and doesnt affect the actual results.

My previous comment was much easier to read before the whitespace was stripped out. Apologies.

Re my previous comment – those two terms are in fact identical. Im not sure how I missed that.

In 3.3.2: ‘Without loss if generality, we can scale the p_j to define a probability distribution…’

This seems somewhat unjustified, especially since we already have set sum_i q_i = 1 /a . Rather than justifying various rescalings it might be simpler to just carry the value of sum_j exp(r_j) through:

Set k = sum_j exp(r_j)

Set s_j = exp(r_j) / k

Note that s is a distribution

Now: log (sum_j exp(r_j)) – (sum_j (r_j * exp(r_j))) / (sum_j exp(r_j))

= log k – sum_j (r_j * s_j)

= log k – sum_j (log(s_j * k) s_j)

= log k – sum_j (log (s_j) * s_j) – sum_j (log (k) * s_j)

= – sum_j (log (s_j) * s_j)

<= log n

Its effectively the same proof but requires less handwaving about rescaling various terms.

As a side note, there is a wordpress plugin for LaTeX which might be useful if you often discuss mathematics.

http://wordpress.org/extend/plugins/wp-latex/

In 3.3.4 you have (paraphrased):

lim q_i->infintity log (exp (q_i / b(q_i)) + sum_j/=i (exp (1/b(q_i)))

which in the next line is simplified to:

log(exp(1/a))

Since exp(1/b(q_i)) -> 1 it seems to me that this should be:

log(exp(1/a) + n – 1)

Indeed, if you graph C(q_i, 1_-i) / q_i as q_i becomes large it quickly converges to a value > 1. This is particularly noticeable when either a or n is large. For example, with a=1 and n=2 the ratio approaches something in the region of 1.3. This seems intuitively correct – increasing the commission v should decrease the worst case loss.

In the section which establishes that C(q) – q_i > 0 a non-zero bound can probably be obtained when n>1.

If the description of the algorithm is recast in terms of the variables used above we obtain (I think):

Set r_j = q_j / b(q)

Set k = sum_j exp(r_j)

Set s_j = exp(r_j) / k

p_i(q) = s_i + a * H(s)

sum_i p_i(q) = 1 + a * n * H(s)

where H(s) is the entropy of the distribution s. This seems a much more natural description of what the algorithm is doing, especially if the probability distribution s can be given a physical interpretation (perhaps races between exponential variables). In addition many of the properties proved in the paper, cast in this light, rely only on the fact that s is a distribution and ignore its relation to q. I’m going to try to produce a cost function for which treats s simply as an unspecified function of q – it may be that this can be generalised to a class of market makers.

I would be interested to hear your thoughts on this if you have a moment.

why prediction markets? when we have the octopus.

@Jamie, this is fantastic. Thanks. I like your simplification, both in notation and in how to understand what is going on. I think it would be great if you can generalize the results for a broader class of market makers. We tried but failed to make similar “liquidity-sensitive” modifications to other scoring rules.

Update: Actually I spoke too soon: Abe has now discovered some generalizations.

@smart ass: Octopus is sadly retired. Otherwise I agree. 🙂

Thank you for your comments Jamie.

You are correct to note that if we restrict ourselves to only the positive orthant, the sum of prices never quite reaches 1. Instead, for small alpha, we get values that sum very very very close to one (within machine precision) – this is because

a*log(exp(1/a)+n-1) ~ a*log(exp(1/a)) = 1 for small a.

The key here is a comparison between n-1 and exp(1/a), where for the values we consider exp(1/a) >>> n-1. Since small alpha are appropriate for this market maker, the bounds we have are still good in practice. This is something we will be clarifying in the journal version.

Hallo,

I would be happy to know your opinion about advantages of using the new automated market maker for Idea markets which use play money.

Thank you

@Esayas: I think the new market maker and LMSR are especially well suited for play-money markets, since then the fact that the MM can lose money is not a serious issue. The additional fact that the amount the MM can lose is bounded is quite important though even for play money IMO.

A question related to the topic: Are there any applications of automated market makers outside the field of prediction markets?

Good question Panos. I view weatherbill (insurance) and bet365 (gambling) as automated market makers. The reason prediction markets are a good fit is that losing money on average is ok — it can be seen as a payment for information — as long as the loss is bounded. I’m sure there are plenty of automated or semi-automated market makers on Wall Street but I’m not too familiar and probably most of them are unpublished for obvious reasons.

Actually, I was not thinking of cases where the information is the main outcome. I was thinking of using market makers to increase the transaction volume of a market, where the market owners has the incentives to “subsidize” the transaction,.

Think of a Priceline-like scenario: The hotel owner has posted some prices (hidden from the final consumer?). The customer bids a price, which is lower than the lowest price accepted by the hotel, by say $1. In that case, it makes sense to use a “subsidizing” automatic market maker to bridge the gap between bid-ask, and get the transaction to happen. Since the market owner is benefiting by the transaction (e.g., charges a fee equal to x% of the price), then it makes sense to provide the funds that will allow the two parties to transact. Same thing for labor market (e.g. on oDesk I want to hire people at $10/hr, the worker asks for $10.50/hr: the market owner subsidizes the difference, since they make 10% of the overall volume)

There are also the network effects that generate additional benefits when the market is moving. In principle even governments would be interested in doing so, as all transactions generate tax revenue, especially in countries with VAT.

I am not sure if this makes sense. I see strategic considerations here (what to bid in order to take full advantage of the subsidy), the markets do not have any external ground truth (on the other hand, stock markets do not have one either), and other objections.

Any thoughts? I guess there should be other people that have thought about these. They seem pretty straightforward ideas.

Hello, I’m getting through the Flexible Market Maker paper and trying to understand the algorithm changes over LMSR, particularly how the liquidity variable ‘b’ is calculated. In the paper you say it is `alpha * sum(q_i)`, the alpha constant multiplied by total number of shares outstanding? So what happens at the beginning when no shares have been bought yet? Does b = 0? That seems to cause problems in the other formulas.

Thank you for releasing your research and making it so accessible and relatively easy to grasp. I’ve learned a lot in the last day going through some posts and papers. I know this is an old post, but I’m hoping you or someone would be able to give me some insight into whether my line of logic is sound.

The MM models here take into an account any number of possible outcomes, each outcome represented by `q_i`. Correct me if I’m wrong, but it seems like it assumes that one and only one outcome can be correct and cash in, while any shares in the other outcomes eventually become worthless.

I’m thinking of an application using play money where any number of outcomes can end up cashing in at different amounts. I would love for it to be purely trader-powered but if there are not many people playing at first, especially in a market with a large number of stocks, there wouldn’t be much activity.

Here’s a basic example of what I’m thinking: think of each “stock” (not sure what the proper term is so I’ll use stock) corresponding with a contestant at a ring toss game with 500+ contestants. Each player plays the same game individually, and each player’s performance is (for the most part) not affected by any other player’s performance. For each ring a player successfully tosses onto the peg, their stock is worth $1. So if David ends up getting 8 rings on the peg, that corresponding stock will cash in at $8. So there is no real set limit to the total price of all stocks. Would the MM have to act in a very different way than described?

Let’s also say the MM can be fed predictions to base prices on. According to my research and stats, I put an expected value of David’s ring count to be 7.429, for a price of $7.43. Is it possible to use that prediction price as a base, then use the result from a current MM model as a ratio to somehow act on it?

If David sprains a finger on his throwing hand before the game, my prediction would jump down to $3.75. Conversely, if he ate his Wheaties this morning my prediction would change to $9.62. It seems like I would want my base price to have a varying degree of effect on a stock, depending on market size (much as you control the liquidity variable `b` in your model.)

I hope someone can validate or invalidate these thoughts, and hopefully point me in the right direction of where to look for answers. I’m a software engineer looking for a model to best implement into my toy project.