The night of February 15, 2012, was an uncomfortable one for me. Not a natural talker, I was out of my element at a press dinner organized by Yahoo! with journalists from the New York Times, Fast Company, MIT Tech Review, Forbes, SF Chronicle, WIRED, Reuters, and several more [1]. Even worse, the reporters kept leading with, “wow, this must be a big night for you, huh? You just called the election.”

We were there to promote The Signal, a partnership between Yahoo! Research and Yahoo! News to put a quantitative lens on the election and beyond. The Signal was our data-driven antidote to two media extremes: the pundits who commit to statements without evidence, and the journalists who, in the name of balance, commit to nothing. As MIT Tech Review billed it, The Signal would be the “mother of all political prediction engines”. We like to joke that the quote undersold us: our aim was to be the mother of all prediction engines, period. The Signal was a broad project with many moving parts, featuring predictions, social media analysis, infographics, interactives, polls, and games. Led by David “Force-of-Nature” Rothschild, myself, and Chris Wilson, the full cast included over 30 researchers, engineers, and news editors [2]. We quickly confirmed a clear thirst for numeracy in news reporting: within 4 months, The Signal grew to 2 million unique users per month [3].

On that night, though, the journalists kept coming back to the Yahoo! PR hook that brought them in the door: our insanely early election “call”. At that time in February, Romney hadn’t even been nominated.

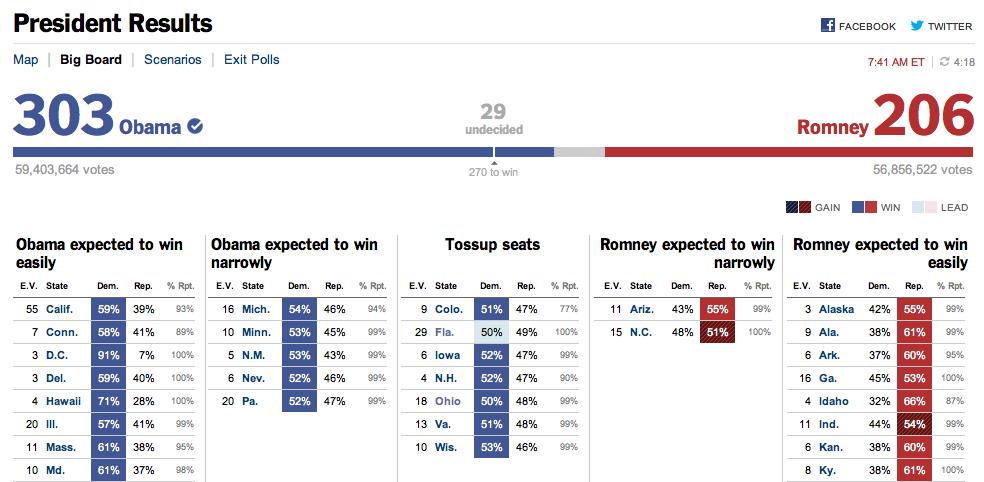

No, we didn’t call the election; we predicted it. That may sound like the same thing but, in scientific terms, there is a world of difference. We estimated the most likely outcome (Obama would win 303 Electoral College votes, more than enough to return him to the White House) and assigned it a probability. A probability of less than one, implying a greater-than-zero chance of being wrong. But that nuance is hard to explain to journalists and the public, and not nearly as exciting.

Although most of our predictions were based on markets and polls, the “303” prediction was not: it was a statistical model trained on historical data of past elections, authored by economists Patrick Hummel and David Rothschild. It doesn’t even care about the identities of the candidates.

I have to give Yahoo! enormous credit. It took a lot of guts to put faith in some number-crunching eggheads in their Research division and go to press with their conclusions. On February 16, Yahoo! went further. They put the 303 prediction front and center, literally, as an “Exclusive” banner item on Yahoo.com, a place that 300 million people call home every month.

The firestorm was immediate and monstrous. Nearly a million people read the article and almost 40,000 left comments. Writing for Yahoo! News, I had grown used to the barrage of comments and emails, some comic, irrelevant, or snarky; others hateful or alert-the-FBI scary. But nothing could prepare us for that day. Responses ranged from skeptical to utterly outraged, mostly from people who had read the headline or the reactions but not the article itself. How dare Yahoo! call the election this far out?! (We didn’t.) Yahoo! is a mouthpiece for Obama! (The model is transparent and published: take it for what it’s worth.) Even Yahoo! News editor Chris Suellentrop grew uncomfortable, especially with the spin from Homepage (“Has Obama won?”) and PR (see “call” versus “predict”), and he kept a tighter rein on us from then on. Plenty of other outlets “got it” and reported on it for what it was: a prediction with a solid scientific basis, and a margin for error.

This morning, with Florida still undecided, Obama had secured exactly 303 Electoral College votes.

Just today Obama wrapped up Florida too, giving him 29 more EVs than we predicted. Still, Florida was the closest vote in the nation, and for all 50 other entities (49 states plus Washington, D.C.) we predicted the correct outcome back in February. The model was not 100% confident about every state, of course: it formally expected to get 6.8 wrong, and it rated Florida the most likely state to flip from red to blue. The Hummel-Rothschild model, based only on a handful of variables like approval rating and second-quarter economic trends, completely ignored everything else of note, including money, debates, bailouts, binders, third-quarter numbers, and more than 47% of all surreptitious recordings. Yet it came within 74,000 votes of sweeping the board. Think about that the next time you hear an “obvious” explanation for why Obama won (his data was biggi-er!) or why Romney failed (too much fundraising!).
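The “6.8 wrong” figure is an expected count, not a forecast that exactly 6.8 states would miss. The post doesn’t spell out how that number was computed; a standard way to arrive at such a figure, assuming the model gives a probability $p_i$ that Obama carries each of the 51 contests and the forecast calls whichever side is more likely, is to add up each contest’s chance of going the other way:

$$
\mathbb{E}[\text{contests missed}] \;=\; \sum_{i=1}^{51} \Pr(\text{contest } i \text{ goes against the forecast}) \;=\; \sum_{i=1}^{51} \bigl(1 - \max(p_i,\, 1 - p_i)\bigr)
$$

By linearity of expectation this sum holds even when state outcomes are correlated, as they are in practice; correlation changes the spread of the miss count, not its mean.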

Kudos to Nate Silver, Simon Jackman, Drew Linzer, and Sam Wang for predicting all 51 states correctly on election eve.

As Felix Salmon said, “The dominant narrative, the day after the presidential election, is the triumph of the quants.” Mashable’s Chris Taylor remarked, “here is the absolute, undoubted winner of this election: Nate Silver and his running mate, big data.” ReadWrite declared, “This is about the triumph of machines and software over gut instinct. The age of voodoo is over.” The new news quants “bring their own data” and represent a refreshing trend in media away from rhetoric and anecdote and toward accountability, if not total objectivity. We need more people like them. Whether you agree or not, their kind (our kind) will proliferate.

Congrats to David, Patrick, Chris, Yahoo! News, and the entire Signal team for going out on a limb, taking significant heat for it, and correctly predicting 50 out of 51 states and an Obama victory nearly nine months prior to the election.

Footnotes

[1] Here was the day-before guest list for the February 15 Yahoo! press dinner, though one or two didn’t make it:

- New York Times, John Markoff
- New York Times, David Corcoran
- Fast Company, EB Boyd
- Forbes, Tomio Geron
- MIT Tech Review, Tom Simonite
- New Scientist, Jim Giles
- Scobleizer, Robert Scoble
- WIRED, Cade Metz
- Bloomberg/BusinessWeek, Doug MacMillan
- Reuters, Alexei Oreskovic
- San Francisco Chronicle, James Temple

[2] The extended Signal cast included Kim Farrell, Kim Capps-Tanaka, Sebastien Lahaie, Miro Dudik, Patrick Hummel, Alex Jaimes, Ingemar Weber, Ana-Maria Popescu, Peter Mika, Rob Barrett, Thomas Kelly, Chris Suellentrop, Hillary Frey, EJ Lao, Steve Enders, Grant Wong, Paula McMahon, Shirish Anand, Laura Davis, Mridul Muralidharan, Navneet Nair, Arun Kumar, Shrikant Naidu, and Sudar Muthu.

[3] While I continue to be amazed at how much greener the grass is at Microsoft compared to Yahoo!, my one significant regret is not being able to see The Signal project through to its natural conclusion. The Signal blog was by no means the sole product of the project, but it was certainly the hub. In the end, I wrote 22 articles and David Rothschild at least three times as many.